DBiM Insight

-

DBiM: Restructuring the Metaverse with AI Agents to Build an Autonomous Collaborative Digital Ecosystem

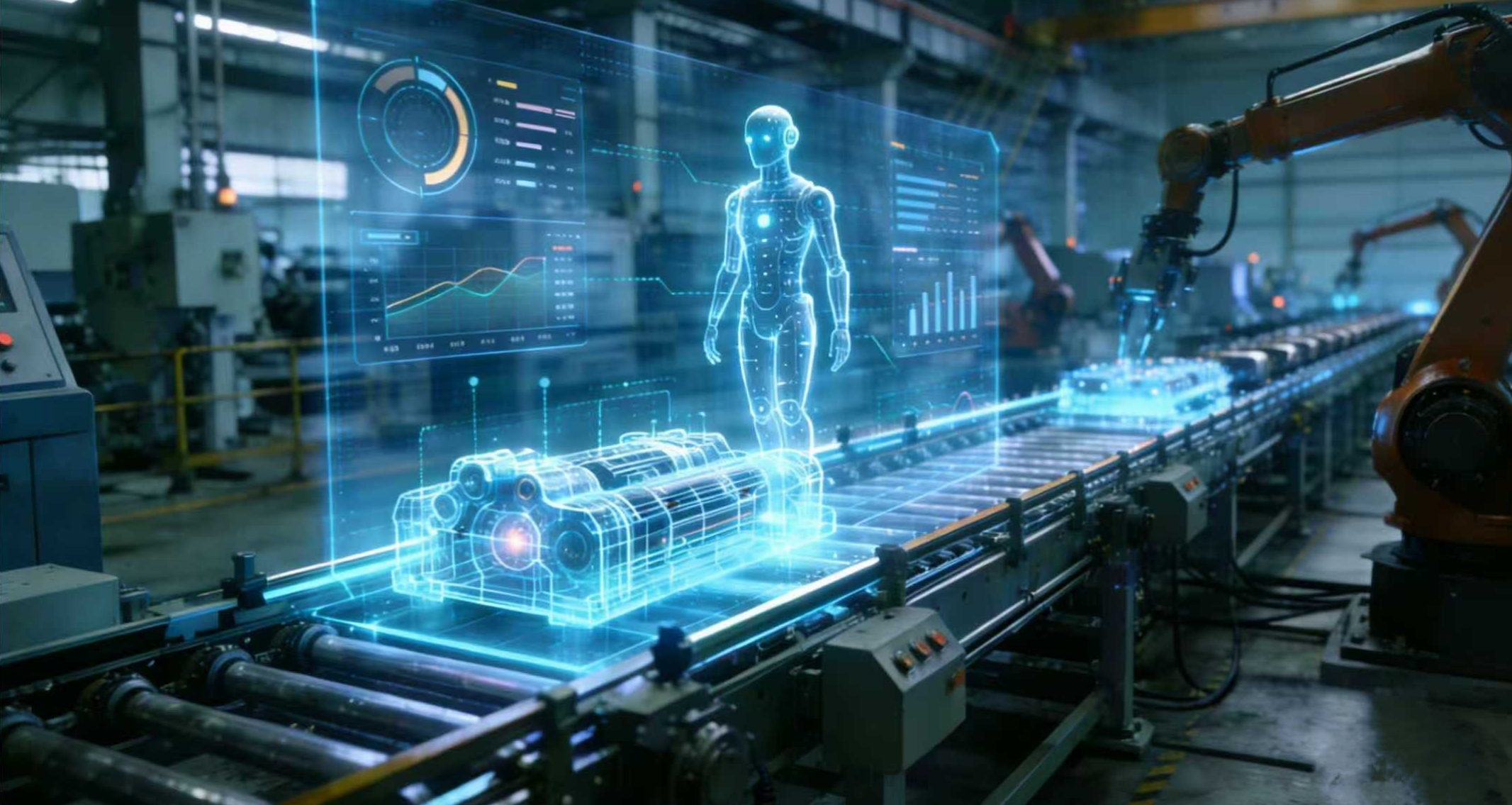

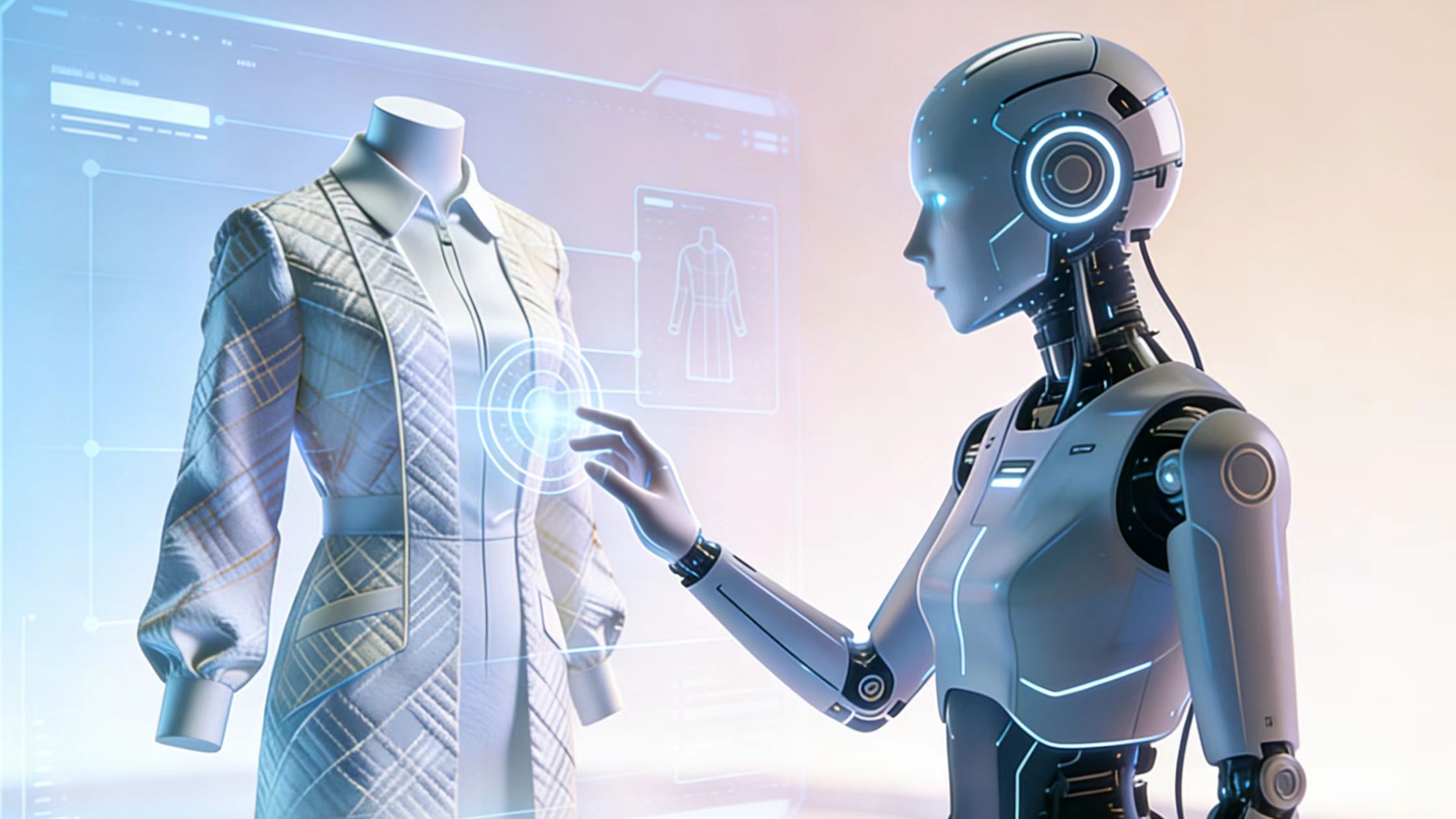

Once a distant sci-fi concept, the metaverse has evolved into a core digital innovation track, yet its potential is hindered by fragmented scenarios, inefficient workflows, and weak economic synergy. DBiM addresses these pain points by leveraging AI Agents as the core driver, building an autonomous collaborative ecosystem that integrates intelligence, connectivity, and practical value, reshaping human-digital interactions and…

-

From Hype to Utility: How the Metaverse Is Evolving Into a Business-Critical Tool

For years, the metaverse was framed as a “futuristic playground”: a realm of virtual concerts and digital avatars, more spectacle than substance. Today, that narrative is shifting. Fueled by technologies such as AI Agents, the metaverse is emerging as business-critical infrastructure, one that streamlines operations, unlocks cross-domain collaboration, and bridges physical-digital value gaps. DBiM’s ecosystem, centered on intelligent autonomy and pragmatic…